Causal AI in Finance

Moving beyond correlation to uncover true cause-and-effect relationships in complex systems.

The Challenge

The Limits of Correlation-Based AI.

Standard Machine Learning models are excellent at finding patterns (correlations), but they often fail to understand why those patterns exist (causation). In complex environments like financial markets or biological systems, relying on simple correlation is dangerous because "spurious correlations" often break down when conditions change.
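A small simulation can make the danger concrete. The sketch below is illustrative only: the variables `x`, `y`, and the hidden common driver `z` are hypothetical, and the noise levels are arbitrary assumptions. Both `x` and `y` depend on `z`, so they correlate strongly in observational data, yet the correlation disappears once `x` is set independently of `z`.

```python
import math
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def simulate(n, intervene, seed=0):
    """Hypothetical system: a hidden driver z influences both x and y.

    x has no causal effect on y. When intervene is True, x is set at
    random, which cuts its link to z (a regime change).
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)
        x = rng.gauss(0, 1) if intervene else z + 0.3 * rng.gauss(0, 1)
        y = z + 0.3 * rng.gauss(0, 1)
        xs.append(x)
        ys.append(y)
    return xs, ys


observational_r = pearson(*simulate(5000, intervene=False))
interventional_r = pearson(*simulate(5000, intervene=True))
print(f"observational: {observational_r:.2f}, after intervention: {interventional_r:.2f}")
```

A model trained on the observational data would treat `x` as a strong predictor of `y`, and that signal would be gone as soon as the mechanism generating `x` changed.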

ADIA Lab recognizes that the next frontier of AI is Causal Inference: building models that understand the underlying mechanisms of a system. The challenge is that discovering these causal structures is computationally very hard (the general problem is NP-hard), so it requires novel mathematical approaches to distinguish a true driver from a coincidence.

Our approach

Mapping the "Causal Graph."

ADIA Lab partnered with Crunch to launch the Causal Discovery Challenge. The objective was to reconstruct the Directed Acyclic Graph (DAG), the mathematical map that defines the direction of influence between variables.
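As a rough illustration of what a DAG is, the sketch below stores a graph as a list of directed `(parent, child)` edges and checks that it contains no cycles using Kahn's topological sort. The variable names are hypothetical, and this is not the competition's data format.

```python
from collections import deque


def is_dag(nodes, edges):
    """Return True if the directed graph has no cycles (Kahn's algorithm)."""
    indegree = {v: 0 for v in nodes}
    children = {v: [] for v in nodes}
    for parent, child in edges:
        children[parent].append(child)
        indegree[child] += 1

    # Start from nodes with no incoming edges and peel the graph layer by layer.
    queue = deque(v for v in nodes if indegree[v] == 0)
    visited = 0
    while queue:
        v = queue.popleft()
        visited += 1
        for child in children[v]:
            indegree[child] -= 1
            if indegree[child] == 0:
                queue.append(child)

    # If a cycle exists, its nodes never reach indegree 0 and are never visited.
    return visited == len(nodes)


nodes = ["Rain", "Mud", "Traffic"]
print(is_dag(nodes, [("Rain", "Mud"), ("Rain", "Traffic")]))  # True
print(is_dag(nodes, [("Rain", "Mud"), ("Mud", "Rain")]))      # False
```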

Unlike standard forecasting competitions, success was measured not by forecast accuracy but by Structural Intervention Distance (SID). This metric counts how often a model's inferred graph would mispredict the effect of an intervention (e.g., "If I change X, will Y actually move?"), so lower scores are better. This required participants to build algorithms capable of distinguishing true causality from statistical noise across both linear and non-linear systems.
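The intuition behind an intervention-based score can be sketched with a much simpler proxy. The code below is not the SID computation itself. It only counts ordered pairs of variables for which the true and estimated graphs disagree on whether intervening on the first can move the second (that is, whether the second is a descendant of the first). The function names and the three-variable example are hypothetical.

```python
def descendants(edges, node):
    """All nodes reachable from `node` by following directed edges."""
    children = {}
    for parent, child in edges:
        children.setdefault(parent, []).append(child)
    seen, stack = set(), [node]
    while stack:
        current = stack.pop()
        for child in children.get(current, []):
            if child not in seen:
                seen.add(child)
                stack.append(child)
    return seen


def reachability_mismatches(nodes, true_edges, estimated_edges):
    """Simplified proxy (NOT the real SID): count ordered pairs (i, j) where
    the two graphs disagree on whether changing i can affect j."""
    mismatches = 0
    for i in nodes:
        true_desc = descendants(true_edges, i)
        est_desc = descendants(estimated_edges, i)
        mismatches += len(true_desc ^ est_desc)  # symmetric difference
    return mismatches


nodes = ["X", "Y", "Z"]
true_graph = [("X", "Y"), ("Y", "Z")]   # X -> Y -> Z
estimate = [("X", "Y"), ("Z", "Y")]     # one edge reversed
print(reachability_mismatches(nodes, true_graph, estimate))    # 3
print(reachability_mismatches(nodes, true_graph, true_graph))  # 0
```

Even a single reversed edge changes the answer to several "what happens if I change this variable?" questions, which is why intervention-based metrics penalize direction errors more heavily than a simple edge count would suggest.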

The Solution

Distinguishing Signal from Spurious Noise.

The competition attracted top researchers who utilized a mix of structural equation modeling and deep learning techniques to solve the puzzle.

The Methodology:

- Structure Learning: Participants built models that inferred the direction of influence (e.g., does Rain cause Mud, or does Mud cause Rain?) purely from observational data, even when the variables were highly correlated.

- Robustness Testing: The models were tested on "Out-of-Distribution" data. True causal relationships hold even when the environment changes, whereas spurious correlations often vanish.

- Graph Recovery: The winning solutions successfully recovered the ground-truth causal graph, filtering out noisy variables that looked predictive but were actually irrelevant.
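One way to see how direction can be inferred from observational data alone is a toy test in the spirit of additive-noise and LiNGAM-style methods: fit a linear model in both directions and prefer the direction whose residuals look independent of the input. The sketch below is an assumption-laden illustration, not any participant's method. It assumes a linear relationship with non-Gaussian (uniform) noise, and it uses the correlation between squared values as a crude stand-in for a proper independence test such as HSIC.

```python
import math
import random


def pearson(a, b):
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def dependence_score(cause, effect):
    """Regress effect on cause, then measure how strongly the residual's
    magnitude depends on the cause (near 0 suggests independence)."""
    n = len(cause)
    mc, me = sum(cause) / n, sum(effect) / n
    slope = (sum((c - mc) * (e - me) for c, e in zip(cause, effect))
             / sum((c - mc) ** 2 for c in cause))
    residuals = [(e - me) - slope * (c - mc) for c, e in zip(cause, effect)]
    squared_cause = [(c - mc) ** 2 for c in cause]
    squared_resid = [r * r for r in residuals]
    return abs(pearson(squared_cause, squared_resid))


def infer_direction(x, y):
    """Pick the direction whose residuals look more independent of the input."""
    return "x->y" if dependence_score(x, y) < dependence_score(y, x) else "y->x"


# Hypothetical data: rain drives mud, with uniform (non-Gaussian) noise.
rng = random.Random(0)
rain = [rng.uniform(-1, 1) for _ in range(4000)]
mud = [r + rng.uniform(-1, 1) for r in rain]
print(infer_direction(rain, mud))
```

The asymmetry exists only because the noise is non-Gaussian. With linear relationships and Gaussian noise, both directions fit equally well and this kind of test cannot tell them apart.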

Some of the top contributions included:

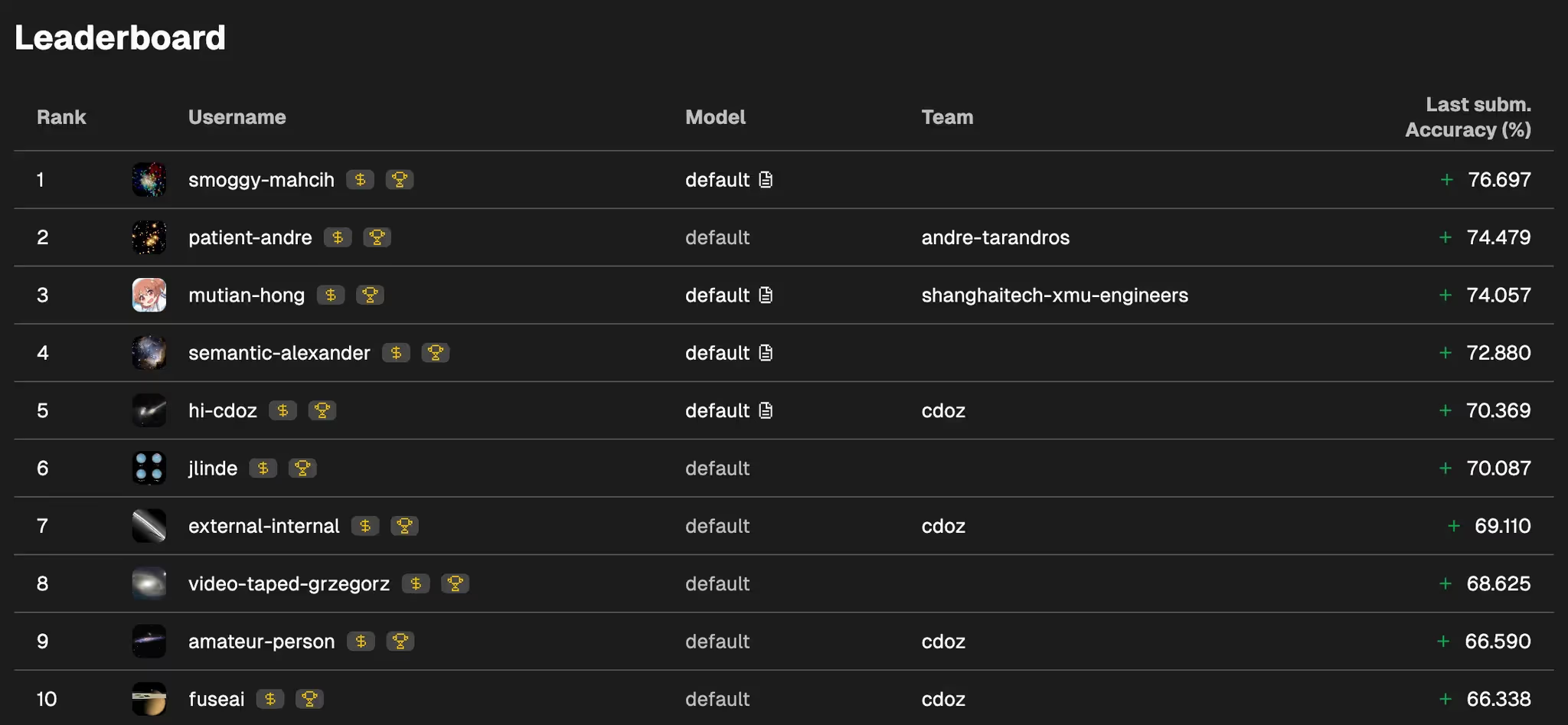

- Hicham Hallak, an engineer from École Centrale, developed an end-to-end deep learning solution using graph neural networks where edge features classified nodes. The model achieved 76.7% balanced accuracy by processing scatter plot data through residual convolutional blocks, self-attention layers, and specialized classification heads.

- Mutian Hong from ShanghaiTech University and Guoqin Gu from Xiamen University achieved 74.06% accuracy through extensive feature engineering with innovative data augmentation. Their approach combined correlation-based features, information-theoretic features, causal discovery algorithms (ExactSearch, PC, FCI, GRaSP), regression coefficients, and structural equation modeling outputs.

- Alex KC achieved 72.88% accuracy through extensive feature engineering with 923 features across multiple categories. The approach combined correlation statistics, conditional independence measures, causal discovery algorithms (GES, BES, DirectLiNGAM), and conditional mutual information features estimated using nearest-neighbor approaches. A final LGBM classifier integrated all features to predict causal relationships.

- Hoàng Thiên Nữ reached 70.37% accuracy using polynomial transformations, ANM-based features, and AutoML optimization. The solution employed SMOTE oversampling and 5-fold cross-validation, with AutoGluon achieving superior performance over manually tuned models.

The competition generated production-ready algorithms achieving accuracy scores up to 76.7%, significantly outperforming some traditional methods.
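Balanced accuracy, the figure quoted for the top entry, is conventionally the mean of per-class recall, which keeps a model from scoring well simply by predicting the most common class. The sketch below uses that standard definition. The class labels are hypothetical, and the competition's exact scoring code is not reproduced here.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall over the classes present in y_true."""
    total = defaultdict(int)
    correct = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    return sum(correct[c] / total[c] for c in total) / len(total)


# Hypothetical labels: most variable pairs have no causal link.
y_true = ["none"] * 8 + ["cause"] * 2
y_pred = ["none"] * 10  # a lazy model that always predicts "none"

plain = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(plain)                              # 0.8
print(balanced_accuracy(y_true, y_pred))  # 0.5
```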

The Impact

Building "Crash-Proof" Models.

This collaboration demonstrated the practical power of Causal AI. By identifying true drivers rather than just correlates, models become significantly more robust to "regime shifts" (market crashes or systemic changes).

Key Results:

- True Driver Identification: The top models successfully identified the causal parents of the target variables, separating them from spurious correlates.

- Low SID Scores: The winning models achieved exceptionally low Structural Intervention Distance scores, proving they could predict the effects of interventions with high reliability.

- Scientific Leadership: The partnership cemented ADIA Lab’s position at the forefront of Causal AI research, validating new methods for extracting reliable insights from high-dimensional datasets.