Real-Time FX Pricing

Delivers institutional-grade FX pricing with <60μs latency using ensemble intelligence.

The Challenge

Stale Pricing in Volatile Markets.

In fast-moving FX markets, traditional pricing models often lag behind reality. Internal bank models typically rely on simple heuristics or delayed feeds, generating "point estimates" (e.g., "Price is 1.05") that fail to account for sudden volatility spikes.

When the market moves faster than the model, the price becomes "stale." To protect the bank, risk engines automatically widen spreads. The result: the bank becomes uncompetitive, corporate clients take their flow to agile fintechs or Tier 1 competitors, and the desk loses P&L to slippage.

Our Approach

Stress-Testing via Real-Time Simulation.

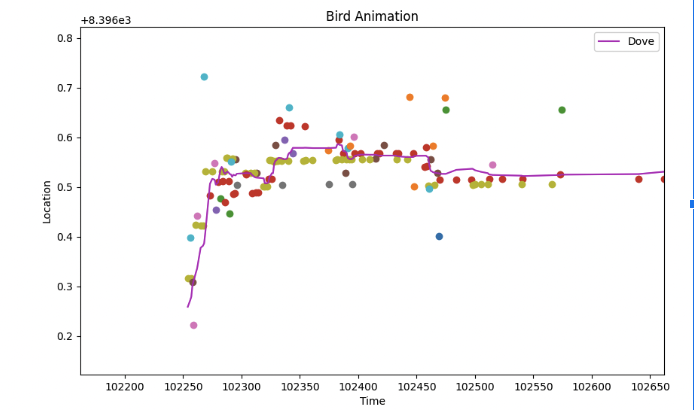

Before deploying into live capital markets, Crunch developed the Falcon Challenge as a rigorous, real-time stress test. By modeling the chaotic flight paths of biological targets (which mathematically mirror the "Lévy flight" patterns found in market microstructure), we trained an ensemble of models to predict a "probability density" rather than just a direction.

This simulation forced models to distinguish between meaningful signal and random noise in microseconds. It shifted the forecasting paradigm from making a single guess to mapping out a risk-adjusted probability map, creating a new generation of adaptive algorithms capable of handling extreme market turbulence.
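To make the difference between a single guess and a probability density concrete, here is a minimal sketch of how a density forecast can be scored against the tick that actually arrives. The Gaussian shape, the log-likelihood scoring rule, and all names and numbers below are illustrative assumptions; the source does not say how the Falcon Challenge scores its models.

```python
import math


def gaussian_log_likelihood(observed: float, mean: float, std: float) -> float:
    """Log-density of `observed` under a Normal(mean, std) forecast (assumed shape)."""
    if std <= 0:
        raise ValueError("std must be positive")
    z = (observed - mean) / std
    return -0.5 * z * z - math.log(std * math.sqrt(2.0 * math.pi))


def score(forecast: dict, observed: float) -> float:
    """Higher is better: the forecast put more probability mass near the outcome."""
    return gaussian_log_likelihood(observed, forecast["mean"], forecast["std"])


# Two hypothetical forecasts with the same centre but different stated uncertainty.
confident = {"mean": 1.0500, "std": 0.0001}
cautious = {"mean": 1.0500, "std": 0.0005}

calm_tick = 1.0501   # a small move
spike_tick = 1.0512  # a sudden move

for name, tick in (("calm", calm_tick), ("spike", spike_tick)):
    print(name, round(score(confident, tick), 2), round(score(cautious, tick), 2))
```

Both forecasts would report the same point estimate of 1.0500. Under this scoring rule the narrow forecast wins on the calm tick and is penalised heavily on the spike, which is the behaviour a point estimate cannot express.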

The Solution

MidOne Pricing Engine & Ensembles.

The technology graduated from the simulation to the MidOne Pricing Engine, an on-premise, ultra-low latency environment.

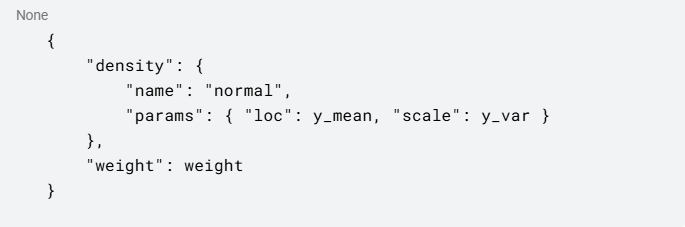

Instead of outputting one "best guess," the engine utilizes a Probabilistic Ensemble. It aggregates thousands of independent model predictions to output a probability distribution (see code snippet) defining the confidence interval of the next tick.
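As an illustration of the aggregation step, the sketch below collapses a set of independent model predictions into a mid-price and an empirical confidence interval. The function name `summarize_ensemble`, the use of the median as the aggregate, the 90% coverage, and the simulated predictions are all assumptions made for the example, not details of the MidOne engine.

```python
import random
import statistics


def summarize_ensemble(predictions, coverage=0.90):
    """Collapse many point predictions into a mid and an empirical interval."""
    if len(predictions) < 2:
        raise ValueError("need at least two predictions")
    ordered = sorted(predictions)
    tail = (1.0 - coverage) / 2.0
    last = len(ordered) - 1
    lower_idx = int(round(tail * last))          # index of the lower quantile
    upper_idx = int(round((1.0 - tail) * last))  # index of the upper quantile
    return {
        "mid": statistics.median(ordered),
        "lower": ordered[lower_idx],
        "upper": ordered[upper_idx],
        "std": statistics.stdev(ordered),
    }


# Stand-in for 2,000 model outputs: simulated draws around a hypothetical mid.
rng = random.Random(7)
predictions = [rng.gauss(1.0500, 0.0002) for _ in range(2000)]

summary = summarize_ensemble(predictions)
print(summary)
```

The width of the interval (`upper - lower`) is the quantity a downstream quoting component could react to. The median is used here only because it is robust to a few outlying models; a weighted average would be an equally plausible choice.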

How it works in production:

- Ingestion: Consumes market data via FPGA/Kernel bypass for maximum speed.

- Ensembling: Aggregates 2,000+ predictions instantly to neutralize individual model bias.

- Execution: Outputs a mid-price with a confidence score, allowing the quoting engine to widen or tighten spreads automatically based on real-time risk.
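The execution step above can be sketched as a function that turns a mid-price and an uncertainty measure into a two-sided quote. The linear rule, the parameter names `base_half_spread` and `risk_multiplier`, and the numbers are hypothetical; a production quoting engine would apply its own risk policy.

```python
def quote(mid, uncertainty, base_half_spread=0.00002, risk_multiplier=1.5):
    """Return (bid, ask): the half-spread grows linearly with model uncertainty."""
    if uncertainty < 0:
        raise ValueError("uncertainty must be non-negative")
    half_spread = base_half_spread + risk_multiplier * uncertainty
    return mid - half_spread, mid + half_spread


# Same mid-price, two hypothetical uncertainty readings from the ensemble.
calm_bid, calm_ask = quote(1.0500, uncertainty=0.00002)
stressed_bid, stressed_ask = quote(1.0500, uncertainty=0.00030)

print("calm spread:    ", calm_ask - calm_bid)
print("stressed spread:", stressed_ask - stressed_bid)
```

When the ensemble agrees, the quote tightens toward the base spread; when predictions disperse, the quote widens without any manual intervention.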

The Impact

Institutional Performance at Scale.

This logic is no longer theoretical. It is deployable today via the MidOne Pricing Engine, giving regional banks and institutional desks the ability to compete on speed and accuracy with the world's largest market makers.

Deploying this ensemble intelligence delivers three core business outcomes:

- <60μs Latency: The architecture is optimized for C++, delivering inference speeds fast enough for High-Frequency Trading (HFT) environments.

- Spread Compression: By accurately predicting the "cone of uncertainty," desks can quote tighter spreads to win more flow without increasing risk exposure.

- Toxic Flow Avoidance: The model identifies "predatory" volatility spikes milliseconds before they happen, allowing the desk to skew pricing defensively and avoid holding toxic inventory.
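One way to picture defensive skewing is to shift both sides of the quote toward the heavier tail of the predicted distribution. This is a sketch under stated assumptions: the function `skewed_quote`, the asymmetry measure, and the `skew_factor` parameter are hypothetical and are not taken from the MidOne engine.

```python
def skewed_quote(mid, lower, upper, half_spread, skew_factor=0.5):
    """Shift the quote centre toward the side where the interval is wider."""
    if not lower <= mid <= upper:
        raise ValueError("expected lower <= mid <= upper")
    # Positive when upside risk dominates, negative when downside risk dominates.
    asymmetry = (upper - mid) - (mid - lower)
    centre = mid + skew_factor * asymmetry
    return centre - half_spread, centre + half_spread


# Symmetric interval: the quote stays centred on the mid.
flat_bid, flat_ask = skewed_quote(1.0500, 1.0498, 1.0502, half_spread=0.00005)

# Upside-heavy interval: both bid and ask move up, so the desk is less
# likely to sell cheaply just before an upward spike.
up_bid, up_ask = skewed_quote(1.0500, 1.0498, 1.0510, half_spread=0.00005)

print(flat_bid, flat_ask)
print(up_bid, up_ask)
```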
